Introduction

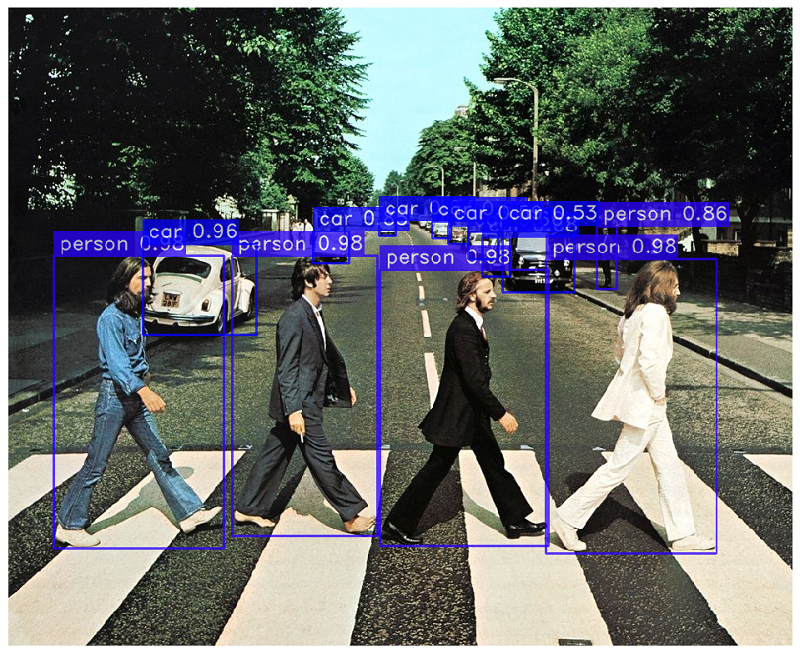

Pose estimation is a computer vision task that involves determining the location of key body points, such as joints, in an image or video. It has a wide range of applications, including robotics, augmented reality, and human-computer interaction.
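To make "key body points" concrete: the COCO keypoint benchmark defines 17 named keypoints per person, from the nose down to the ankles. The sketch below assumes a model returns, for each detected person, a flat list of (x, y, confidence) triples in COCO order. That output layout, the `to_keypoints` helper, and the 0.5 confidence threshold are illustrative assumptions, not a specific library's API.

```python
from dataclasses import dataclass

# The 17 body keypoints of the COCO keypoint format, in COCO's order.
COCO_KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


@dataclass
class Keypoint:
    name: str
    x: float      # pixel column
    y: float      # pixel row (grows downward)
    score: float  # model confidence in [0, 1]


def to_keypoints(flat, threshold=0.5):
    """Hypothetical helper: turn a flat [x1, y1, s1, ..., x17, y17, s17] list
    into named keypoints, dropping those below the confidence threshold."""
    if len(flat) != 3 * len(COCO_KEYPOINT_NAMES):
        raise ValueError("expected 17 (x, y, score) triples")
    points = []
    for i, name in enumerate(COCO_KEYPOINT_NAMES):
        x, y, s = flat[3 * i : 3 * i + 3]
        if s >= threshold:
            points.append(Keypoint(name, x, y, s))
    return points
```

Keeping keypoints as named records rather than raw arrays makes downstream logic (such as gesture rules) easier to read.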

Accurate and efficient pose estimation models are essential for many of these applications. Traditional pose estimation methods often rely on hand-crafted features and complex pipelines, which can be computationally expensive and tend to generalize poorly to diverse data.

YOLO-NAS Pose is a pose estimation model from Deci that builds on the YOLO-NAS object detection architecture. Its architecture was found with neural architecture search (NAS), which automatically explores candidate designs instead of relying on a manually designed network, with the goal of improving both accuracy and inference speed over hand-designed models.

YOLO-NAS Pose Architecture

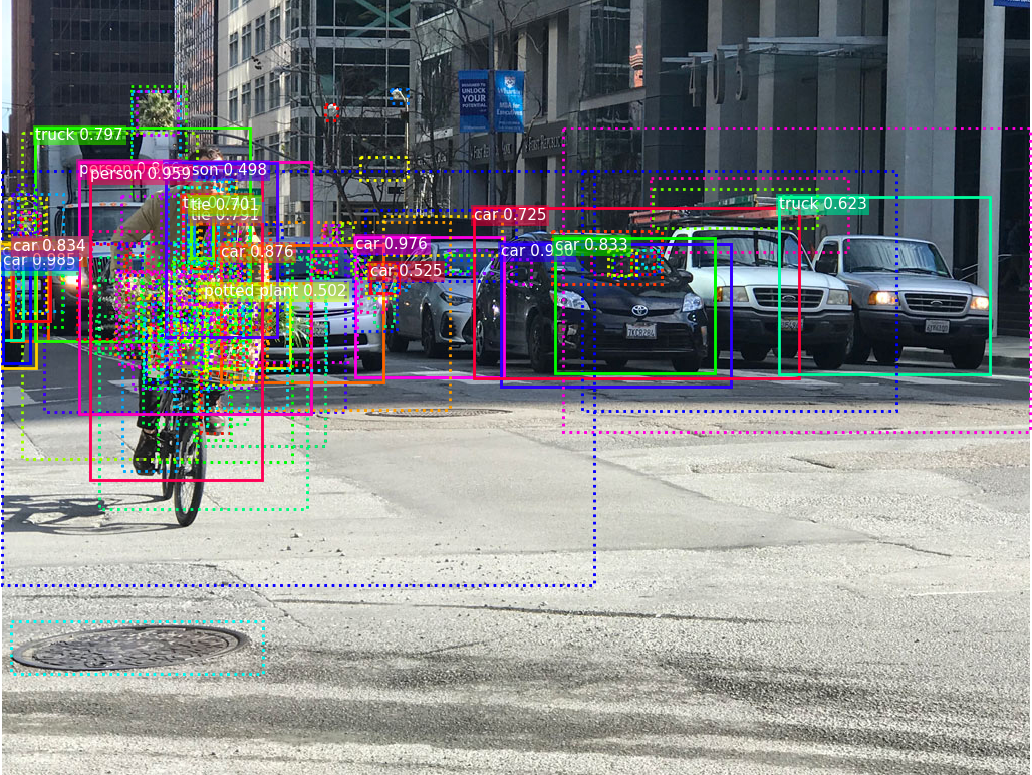

Like its parent detector YOLO-NAS, YOLO-NAS Pose consists of a backbone network, a neck, and a head.

The backbone network extracts features from the input image. The neck combines features from different levels of the backbone into feature maps suited to the pose estimation task. The head uses these feature maps to predict, for each detected person, a bounding box and the locations of that person's key body points, so detection and pose estimation happen in a single pass.
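A minimal sketch of how a YOLO-style anchor-free head typically relates feature-map cells to image pixels. The three strides (8, 16, 32) and the offset parameterization in `decode_keypoint` are assumptions borrowed from common YOLO designs, not YOLO-NAS Pose's documented decoding.

```python
# Assumed strides of the three feature maps produced by the neck.
STRIDES = (8, 16, 32)


def prediction_points(image_size, strides=STRIDES):
    """Return the (x, y) image coordinates of every grid-cell centre
    on which the head makes a prediction, for a square input."""
    points = []
    for stride in strides:
        cells = image_size // stride  # grid width and height at this scale
        for row in range(cells):
            for col in range(cells):
                points.append(((col + 0.5) * stride, (row + 0.5) * stride))
    return points


def decode_keypoint(anchor, offset, stride):
    """Hypothetical decoding: the head predicts an offset (in units of the
    stride) from the cell centre; scale it back to image pixels."""
    ax, ay = anchor
    dx, dy = offset
    return ax + dx * stride, ay + dy * stride
```

For a 640×640 input this yields 80² + 40² + 20² = 8,400 prediction points; the fine stride-8 grid helps with small people, the coarse stride-32 grid with large ones.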

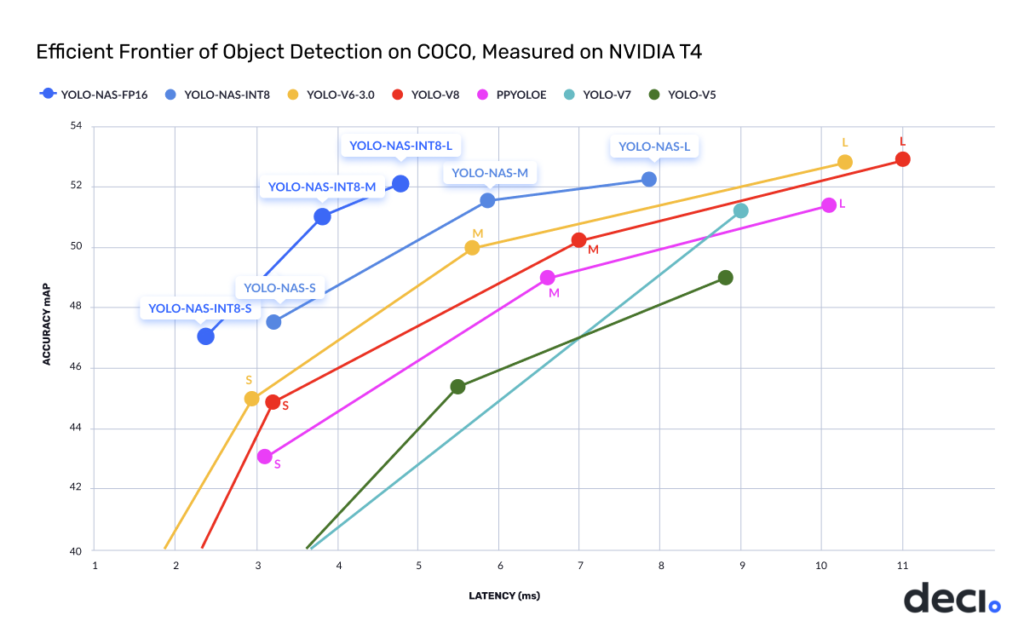

Instead of being hand-designed, the YOLO-NAS Pose architecture was produced by a NAS algorithm: a program that explores a space of candidate building blocks and configurations and keeps the designs that best satisfy criteria such as accuracy and inference latency. The aim is a better accuracy/speed trade-off than manually designed networks achieve.
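The search engine Deci actually used is not described here, so the sketch below swaps in plain random search to illustrate the core idea: sample architectures from a search space and keep the one that scores best against an objective. The search space and `proxy_score` are hypothetical stand-ins; a real search would train or estimate the accuracy of each candidate and measure its latency on target hardware.

```python
import random

# Hypothetical search space: each key is one architectural choice.
SEARCH_SPACE = {
    "depth": [2, 3, 4],
    "width": [32, 64, 128],
    "kernel": [3, 5],
}


def proxy_score(arch):
    """Stand-in objective: reward capacity, penalize estimated compute cost."""
    capacity = arch["depth"] * arch["width"]
    cost = arch["depth"] * arch["width"] * arch["kernel"] ** 2
    return capacity - 0.05 * cost


def random_search(space, trials, seed=0):
    """Sample `trials` architectures and return the best one found."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(trials):
        arch = {name: rng.choice(options) for name, options in space.items()}
        score = proxy_score(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score
```

Random search is only a baseline; practical NAS methods search far larger spaces and use smarter strategies to avoid fully training every candidate.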

YOLO-NAS Pose Training

YOLO-NAS Pose can be trained on a variety of datasets. Deci releases the model through its open-source SuperGradients library, which is built on PyTorch, so training follows a standard PyTorch workflow.

The training process typically involves the following steps:

- Data preparation: Each image (or video frame) in the training dataset is annotated with the locations of the key body points of every person in it.

- Model training: The YOLO-NAS Pose model is trained on the prepared dataset. The training process involves optimizing the model’s parameters to minimize the loss function.

- Model evaluation: The trained model is evaluated on a held-out dataset to assess its performance.
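The data-preparation and hold-out steps above can be sketched with COCO-style annotations, in which each person's keypoints are stored as a flat list of (x, y, v) triples: v = 0 means not labeled, 1 labeled but not visible, 2 labeled and visible. The helper names are hypothetical, and real COCO annotations carry additional fields (such as `id`, `bbox`, `area`, and `iscrowd`) that are omitted here.

```python
import random

COCO_NUM_KEYPOINTS = 17


def make_annotation(image_id, keypoints):
    """Build a partial COCO-style person annotation from 17 (x, y, v) triples."""
    if len(keypoints) != COCO_NUM_KEYPOINTS:
        raise ValueError("expected 17 keypoints")
    flat = [value for triple in keypoints for value in triple]
    return {
        "image_id": image_id,
        "category_id": 1,  # "person" in COCO
        "keypoints": flat,
        "num_keypoints": sum(1 for _, _, v in keypoints if v > 0),
    }


def train_val_split(annotations, val_fraction=0.2, seed=0):
    """Hold out a fraction of images for evaluation. Splitting by image_id
    keeps every person from one image in the same set."""
    image_ids = sorted({a["image_id"] for a in annotations})
    random.Random(seed).shuffle(image_ids)
    n_val = int(len(image_ids) * val_fraction)
    val_ids = set(image_ids[:n_val])
    train = [a for a in annotations if a["image_id"] not in val_ids]
    val = [a for a in annotations if a["image_id"] in val_ids]
    return train, val
```

Splitting by image rather than by annotation avoids leaking near-identical content between the training and held-out sets.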

YOLO-NAS Pose Evaluation

YOLO-NAS Pose is reported to have been evaluated on benchmark datasets including COCO 2017 (COCO17), MPII, and Human3.6M, where it is claimed to outperform other state-of-the-art pose estimation models.

For example, on the COCO17 dataset, YOLO-NAS Pose is reported to achieve an AP@0.5 (average precision at an Object Keypoint Similarity threshold of 0.5) of 82.9%, compared with 74.6% for OpenPose.
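AP@0.5 on COCO counts a predicted pose as correct when its Object Keypoint Similarity (OKS) with a ground-truth pose is at least 0.5. OKS averages, over labeled keypoints, exp(-d² / (2·s²·k²)), where d is the pixel distance between predicted and true keypoint, s² is the person's area, and k = 2σ uses a per-keypoint constant σ. The sketch below mirrors that formula as used in the COCO keypoint evaluation; it covers only the per-pose similarity, not the full precision/recall averaging.

```python
import math

# Per-keypoint sigmas published with the COCO keypoint evaluation (COCO order).
COCO_SIGMAS = [
    0.026, 0.025, 0.025, 0.035, 0.035, 0.079, 0.079, 0.072, 0.072,
    0.062, 0.062, 0.107, 0.107, 0.087, 0.087, 0.089, 0.089,
]


def oks(pred, gt, visibility, area, sigmas=COCO_SIGMAS):
    """Object Keypoint Similarity between one predicted and one true pose.
    pred, gt: lists of (x, y); visibility: COCO v flags; area: pixels squared."""
    total, count = 0.0, 0
    for (px, py), (gx, gy), v, sigma in zip(pred, gt, visibility, sigmas):
        if v == 0:  # unlabeled keypoints are ignored
            continue
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        k = 2 * sigma
        total += math.exp(-d2 / (2 * area * k ** 2))
        count += 1
    return total / count if count else 0.0


def matches_at(oks_value, threshold=0.5):
    """A prediction counts as a true positive for AP@threshold if OKS >= threshold."""
    return oks_value >= threshold
```

The per-keypoint sigmas make the metric more forgiving on hips and knees, whose positions annotators mark less consistently, than on eyes and nose.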

Applications of YOLO-NAS Pose

YOLO-NAS Pose has a wide range of applications, including:

- Robotics: YOLO-NAS Pose can track the body poses of people near a robot, letting the robot respond to, avoid, or imitate human movements.

- Augmented reality: YOLO-NAS Pose can be used to anchor virtual objects to people in real-world scenes, so that overlays follow their body movements.

- Human-computer interaction: YOLO-NAS Pose can be used to control computers using gestures or body posture.
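As a small illustration of gesture-based interaction, a simple rule on top of the predicted keypoints can detect a raised hand. This is a hypothetical sketch that assumes keypoints arrive as 17 (x, y, score) triples in COCO order; the rule and the 0.3 score threshold are illustrative choices, not part of YOLO-NAS Pose.

```python
# Indices into the 17-keypoint COCO order.
LEFT_SHOULDER, RIGHT_SHOULDER = 5, 6
LEFT_WRIST, RIGHT_WRIST = 9, 10


def raised_hands(keypoints, min_score=0.3):
    """Return which hands are raised above their shoulder.
    Image y grows downward, so 'above' means a smaller y value.
    Keypoints below min_score are treated as unreliable and skipped."""
    raised = []
    for side, wrist, shoulder in (("left", LEFT_WRIST, LEFT_SHOULDER),
                                  ("right", RIGHT_WRIST, RIGHT_SHOULDER)):
        _, wrist_y, wrist_score = keypoints[wrist]
        _, shoulder_y, shoulder_score = keypoints[shoulder]
        if wrist_score >= min_score and shoulder_score >= min_score and wrist_y < shoulder_y:
            raised.append(side)
    return raised
```

In practice such rules are usually smoothed over several frames so that a single noisy prediction does not trigger an action.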